At ACL 2025, a premier conference for natural language processing (NLP), a paper on AI security vulnerabilities showcased a rigorous methodology and novel findings.

The paper ranked among the top 8.2% of submissions. It introduced Tempest, a framework that systematically compromises security boundaries in large language models (LLMs) through natural conversations, achieving a 100% success rate on GPT-3.5-turbo and 97% on GPT-4.

But what made it remarkable was that the research was conducted by an AI system called Zochi, developed by the company Intology. A preliminary version of this work, previously known as Siege, was accepted at the workshops of the International Conference on Learning Representations (ICLR).

Intology defines Zochi as an AI research agent capable of autonomously completing the entire scientific process, from literature analysis to peer-reviewed publication. The system operates through a multi-stage pipeline ‘designed to emulate the scientific method.’

So, are we heading towards a Cursor moment for scientific research publishing?

For context, AIM spoke to Raj Palleti, a researcher at Stanford University. Palleti said AI models currently serve more as assistants than co-scientists, much like coding tools such as Cursor or GitHub Copilot, rather than end-to-end systems like Devin.

“That being said, people are hard at work pushing the frontiers of AI models in science,” he said.

“One promising avenue is in areas of research that don’t require a physical lab, like AI research,” Palleti noted, pointing towards Intology’s work with Zochi.

Zochi, he suggested, represents a potential “Devin moment” for scientific research. The system processes thousands of papers, identifies promising directions, and uncovers non-obvious connections across disparate work.

This isn’t an isolated case. Earlier this year, Anthropic’s Claude Opus was credited with contributing significantly to a research paper challenging one of Apple’s studies on reasoning models. The model was said to have done the ‘bulk of the writing’.

In another instance, Japanese AI lab Sakana AI introduced the ‘AI Scientist V2’, an end-to-end agentic system capable of producing peer-reviewed papers.

“We evaluated The AI Scientist V2 by submitting three fully autonomous manuscripts to a peer-reviewed ICLR workshop. Notably, one manuscript achieved high enough scores to exceed the average human acceptance threshold, marking the first instance of a fully AI-generated paper successfully navigating a peer review,” said Sakana AI in a report.

The system begins with ideas, conducts experiments in phases, explores variations through an agentic search tree, and automatically generates papers. It even uses a vision-language model to review figures and captions.

Still, limits remain. Intology stressed that while Zochi demonstrated fully autonomous research capabilities, the company manually reviewed the results, verified the code, corrected minor errors, and wrote the rebuttal without relying on the system. “The Zochi system will be offered primarily as a research copilot designed to augment human researchers through collaboration,” Intology said.

Palleti echoed the sentiment, noting that AI is most useful for relieving repetitive tasks. He said writing papers is time-consuming, with researchers often overloaded with citations and lengthy introductions. “AI can really help here,” he said. “With idea generation, I can see AI being useful as a soundboard for researchers to bounce ideas off. As for AI carrying the bulk of the intellectual work, I’m more sceptical.”

Ethics and Limitations

Even Sakana AI admits its AI Scientist V2 is best suited for workshops reporting preliminary work, as it doesn’t yet meet the rigour of top-tier conferences. Their internal review revealed hallucinations and a lack of methodological depth in some cases.

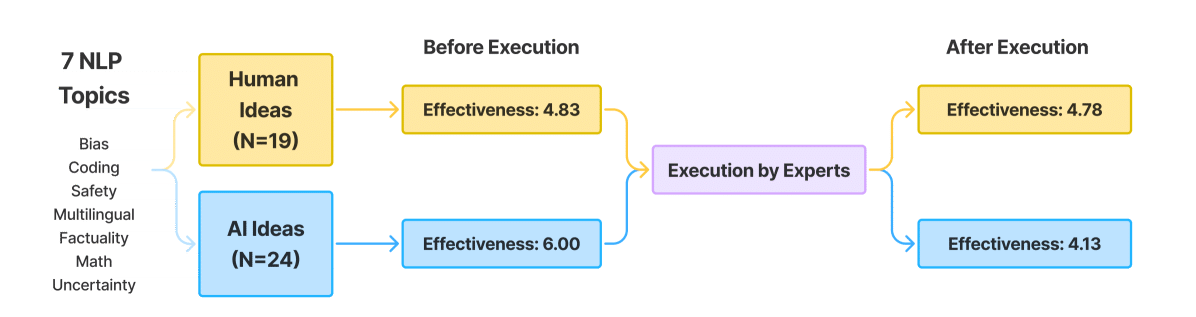

A recent Stanford study also found that while LLM-generated research ideas may seem novel at first, their quality drops significantly upon execution compared with human-generated ideas.

Ethics also remain contentious. When Claude Opus contributed to a paper, it was initially credited as an author, but this was later revoked because arXiv policies prohibit listing AI tools as authors. Sakana AI, in contrast, disclosed AI involvement to organisers and even withdrew an accepted paper to avoid setting premature precedents.

“We emphasise that the community has not yet reached a consensus on integrating AI-generated research into formal scientific publications,” Sakana AI said. “Careful and transparent experimentation is essential at this preliminary stage.”

The post Is It Time for the Vibe Researcher to Rise and Shine? appeared first on Analytics India Magazine.
